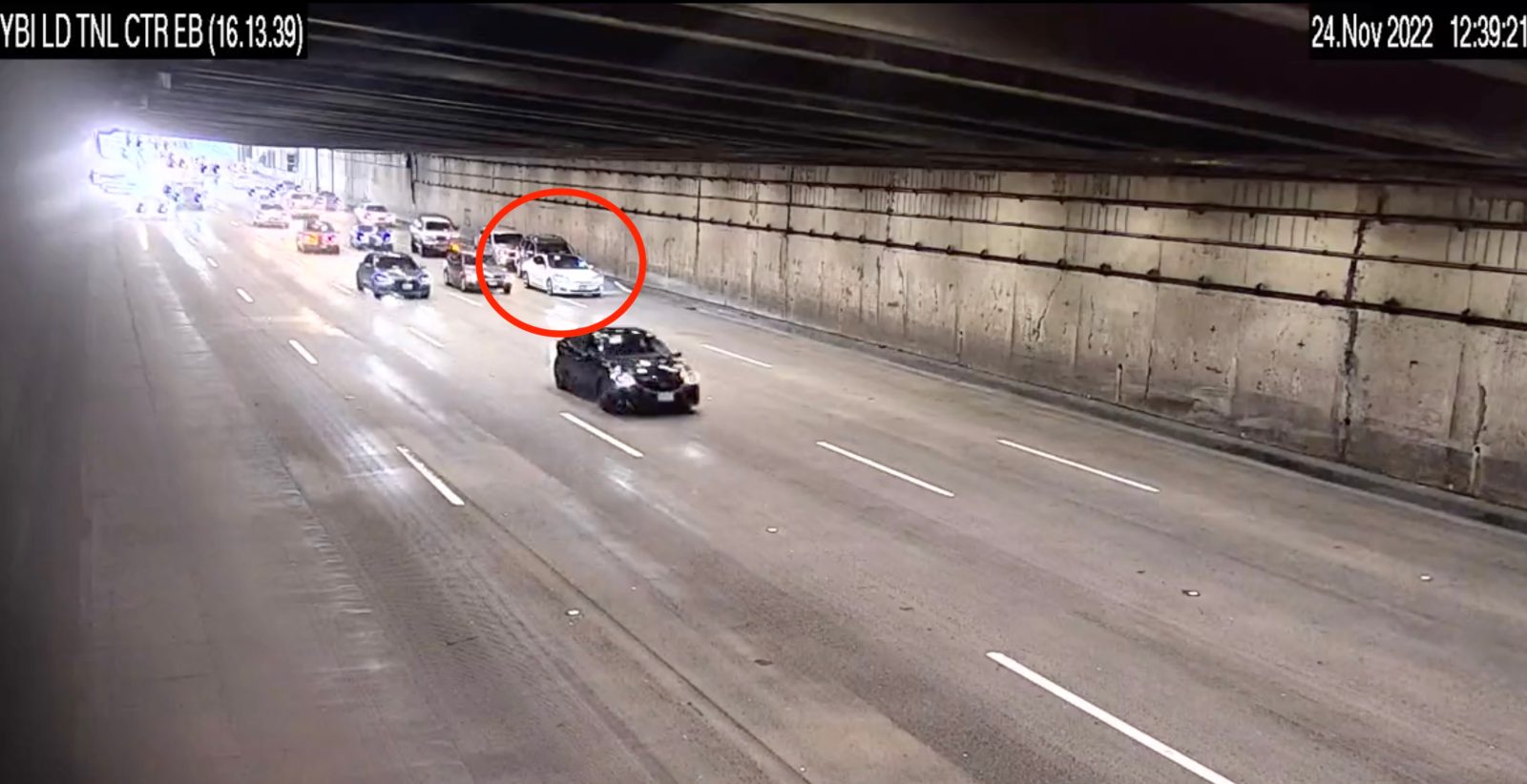

Footage has emerged of the Tesla vehicle, allegedly on “Full Self-Driving,” that triggered an eight-car pile-up in San Francisco in November.

It appears to show a typical case of phantom braking, but as with any Level 2 system, the driver should have responded.

In November, an eight-car pile-up on the San Francisco Bay Bridge made the news after resulting in some minor injuries and blocking traffic for over an hour.

But the headline was that the vehicle that caused it was reportedly a “Tesla on self-driving mode,” or at least that is what the driver told the police.

Now, as we know, Tesla does not have a “self-driving mode.” It has something it calls the “Full Self-Driving package,” which now includes Full Self-Driving Beta, or FSD Beta.

FSD Beta enables Tesla vehicles to drive autonomously to a destination entered in the car’s navigation system, but the driver needs to remain vigilant and ready to take control at all times.

Since the responsibility rests with the driver and not Tesla’s system, it is still considered a Level 2 driver-assist system, despite its name.

Following the incident, the driver of the Tesla told the police that the vehicle was in “Full Self-Driving mode,” but the police seemed to understand the nuances in the incident report:

P-1 stated V-1 was in Full Self-Driving mode at the time of the crash, I am unable to verify if V-1’s Full Self-Driving Capability was active at the time of the crash. On 11/24/2022, the latest Tesla Full Self-Driving Beta Version was 11 and is classified as SAE International Level 2. SAE International Level 2 is not classified as an autonomous vehicle. Under Level 2 classification, the human in the driver seat must constantly supervise support features including steering, braking, or accelerating as needed to maintain safety. If the Full Self-Driving Capability software malfunctioned, P-1 should of manually taken control of V-1 by over-riding the Full Self-Driving Capability feature.

Now The Intercept has obtained footage of the incident, and it clearly shows the Tesla vehicle abruptly coming to a stop for no apparent reason.

This phenomenon is often referred to as “phantom braking,” and it has been known to happen somewhat frequently on Tesla Autopilot and FSD Beta.

Back in November 2021, Electrek released a report called “Tesla has a serious phantom braking problem in Autopilot.” It highlighted a significant increase in Tesla owners reporting dangerous phantom braking events on Autopilot.

This problem was not new in Tesla’s Autopilot. Our report focused on Tesla drivers noticing an obvious increase in instances, which was based on anecdotal evidence but was also backed by a clear increase in complaints to the NHTSA. The report made the rounds in a few other outlets, but the issue did not really go mainstream until The Washington Post released a similar report in February 2022. A couple of months later, NHTSA opened an investigation into the matter.
